This is an old revision of the document!

Tested with Cram v0.4.0, ROS version: Kinetic, Ubuntu 16.04

Bullet world demonstration

This tutorial demonstrates how the internal belief state of the robot is represented in CRAM, how to update the world, how to perform specialized reasoning in it and how to use lightweight simulation for quick testing of plans.

This tutorial assumes that you have a basic understanding of how the belief state is implemented internally. You can read about this in the documentation entry for geometric reasoning. You can find the code of this tutorial on Github.

Installing the projection project

As the system we will be working with will get quite complex things might not go as expected. If you get into trouble while following this tutorial feel free to consult the Support page or contact the person who last edited this tutorial, you can find the username in the footer.

If you followed the installation instructions for the full CRAM on the Installation page, you should be ready to go.

Environment setup

In order to be able to demonstrate many different features of the belief state representation we will set up the environment of the robot similar to how it is done for the real system: we will need an occupancy map for navigation, an IK solver for manipulation, a semantic map of the environment with the furniture etc.

First, let's set up the environment in our terminal by calling the launch file. It already brings up a roscore from within:

$ roslaunch cram_bullet_world_tutorial world.launch

Let's look into that launchfile.

$ roscd cram_bullet_world_tutorial $ cat launch/world.launch

The occupancy map is for navigating the robot around furniture. The kitchens URDF represents it as if the kitchen were an actual robot whose state can change. Its joint states indicate specific points in the kitchen, which change if we, for example, open a drawer. Mainly, the joint states are for visualisation of important places of the kitchen in rviz (see the picture in section Objects in the world). The semantic map is used to reason about places in the kitchen using location designators, that we talk about in the chapter Abstract entity descriptions.

To check if the kitchen URDF was uploaded to the ROS parameter server we can execute the following:

$ rosparam get /kitchen_description | awk 'NR == 5'

Besides all the kitchen information also the PR2 URDF is loaded:

$ rosparam get /robot_description | awk 'NR == 5' # read the fifth line of the URDF

To simulate simple grasping tasks with the PR2 robot in the bullet-world we need an inverse kinematics solver for the arms. In the launch file those are the pr2_arm_kinematics package nodes.

If you retrieve data from the rosparam get commands, we can get going.

REPL setup

Now, let's load the package in the REPL (start the REPL with $ roslisp_repl):

CL-USER> (ros-load:load-system "cram_bullet_world_tutorial" :cram-bullet-world-tutorial) CL-USER> (in-package :cram-bullet-world-tutorial)

As we will be talking to the ROS master, let's first start a node:

BTW-TUT> (roslisp:start-ros-node "bullet_world")

First, let's do all the initializations:

BTW-TUT> (cram-occupancy-grid-costmap::init-occupancy-grid-costmap) ; Inits the occupancy map for navigation. BTW-TUT> (cram-bullet-reasoning-belief-state::ros-time-init) ; Resets the simulated time. BTW-TUT> (cram-location-costmap::location-costmap-vis-init) ; Inits publishing of markers in RViz. BTW-TUT> (cram-tf::init-tf) ; Inits the TF listener object.

The functions above initialize and clean up the environment of the REPL. Currently, we call those functions per hand for educational purposes. However, there exists a function that does the initialization automatically and spawns the completely set up visualization window. It will be described later.

The way CRAM is designed is that the belief state (the Bullet world) is only updated through Prolog queries, as we consider the belief state to be “robot knowledge” that we can query and that can get things asserted to it, and we try to manipulate the knowledge only through Prolog and not through Lisp directly. To learn more about using Prolog in CRAM you can quickly go through the Prolog tutorial.

A new Bullet world instance is created when we load the cram-bullet-reasoning ASDF system (which is the dependency of our tutorial package). It is stored in the *current-bullet-world* variable, which we can access through the following Prolog query:

BTW-TUT> (prolog:prolog '(btr:bullet-world ?world))

Bullet world initialization

To be able to see what is going on in the belief state and to ease the debugging process we visualize the current world instance with OpenGL:

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (btr:debug-window ?world)))

Please note, that the visualization window does not necessarily need to be open when the robot is operating and using its belief state, in fact, it should be closed to save processing power.

The “bullet visualization” window that appears should be empty at the moment. Let's spawn some objects.

The first thing we need is the floor plane:

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (assert (btr:object ?world :static-plane :floor ((0 0 0) (0 0 0 1)) :normal (0 0 1) :constant 0))))

This assert query must not be confused with the Common Lisp test function assert. To show you the origin of this assert query you can look up its definition. Since it is not a function but a Prolog fact you must search with rgrep in the cram_3d_world/cram_bullet_reasoning directory:

BTW-TUT> Alt-x rgrep RET (<- (assert RET *.lisp RET /path/to/your/workspace/src/cram_3d_world/cram_bullet_reasoning/src/ RET

There are quite a few definitions here, serving various queries. All of them have in common, that they change something in the world. The one we are reaching by the previous call is this one, located in world-facts.lisp:

(<- (assert (object ?world ?object-type ?name ?pose . ?args)) (assert ?world (object ?object-type ?name ?pose . ?args)))

In the assert query, the :static-plane is the type of the object we're spawning (it can be :static-plane, :sphere, :box, :mesh, :urdf etc.), :floor is the name of the object, ( (0 0 0) (0 0 0 1) ) is the coordinate.

Via the launch file at the beginning of this tutorial the robot URDF representation has been uploaded to the ROS parameter server under the name robot_description. Let's load it into the world:

BTW-TUT> (let ((robot-urdf (cl-urdf:parse-urdf (roslisp:get-param "robot_description")))) (prolog:prolog `(and (btr:bullet-world ?world) (cram-robot-interfaces:robot ?robot) (assert (btr:object ?world :urdf ?robot ((0 0 0) (0 0 0 1)) :urdf ,robot-urdf)) (cram-robot-interfaces:robot-arms-parking-joint-states ?robot ?joint-states) (assert (btr:joint-state ?world ?robot ?joint-states)) (assert (btr:joint-state ?world ?robot (("torso_lift_joint" 0.15d0)))))))

The line (roslisp:get-param “robot_description”) gets the URDF description of the robot as a string.

(cl-urdf:parse-urdf …) then parses this string into a cl-urdf:robot object.

(cram-robot-interfaces:robot ?robot) predicate reads the name of the robot that was specified in a robot description Lisp package. For the PR2 it is the cram_pr2_description package, which defines the robot name as pr2, so ?robot gets bound to pr2.

This line:

(assert (btr:object ?world :urdf ?robot ((0 0 0) (0 0 0 1)) :urdf ,robot-urdf))

spawns the robot.

Keep in mind, that spawning a robot in the world creates a new object. The first :urdf defines the type of the created object, while the second is used as a keyword to provide the objects urdf.

Then we read out the hard-coded values for robot arm parking states, where the arms are not in the way, and assert those joint states to our PR2 object of the Bullet world.

Finally, let's spawn the kitchen environment (it will take some time as we query KnowRob for the semantic environment map):

BTW-TUT> (let ((kitchen-urdf (cl-urdf:parse-urdf (roslisp:get-param "kitchen_description")))) (prolog:prolog `(and (btr:bullet-world ?world) (assert (btr:object ?world :semantic-map :kitchen ((0 0 0) (0 0 0 1)) :urdf ,kitchen-urdf)))))

In the visualization window you can now see the kitchen loaded from the URDF with the semantic map information attached to it. If you want to have only a visualization of the semantic map without using the URDF, you just need to skip passing the URDF parameter: (assert (btr:object ?world :semantic-map my-no-urdf-kitchen ( (0 0 0) (0 0 0 1))), but as having two representations of the semantic map at the same time in the world can lead to undefined behavior and we would have to clean the world and build it up again, let's just stick to the semantic map with coupled URDF.

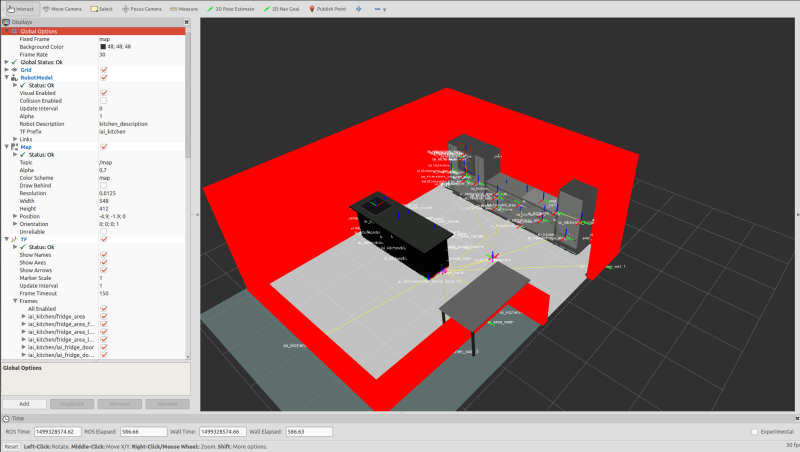

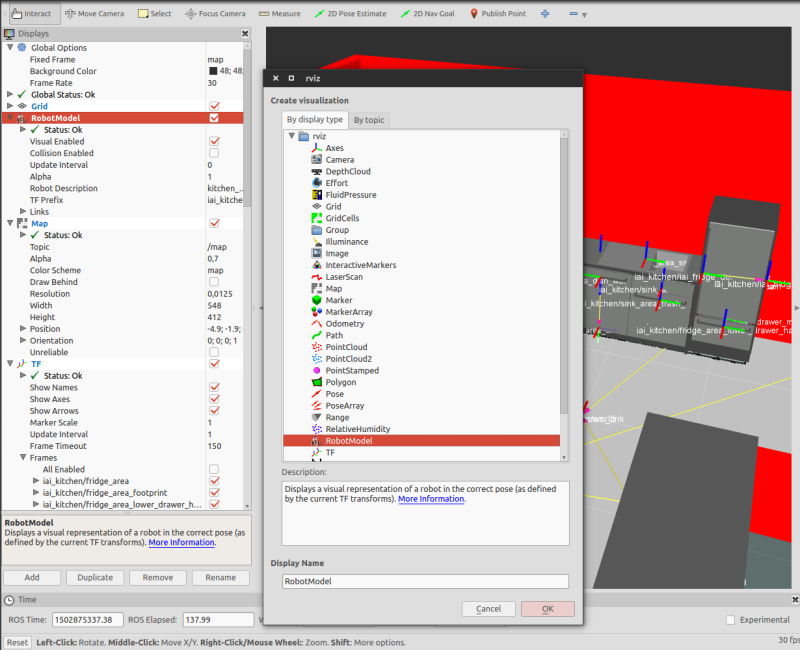

You can see the semantic map in rviz as well. Open up a terminal and type “rosrun rviz rviz” or just “rviz”. Tweak your setting as shown in the image below.

If there are parameters missing (left box) you can add them using the 'Add' button below. Take the robot model of the kitchen for example:

To see the URDF of the kitchen in RViz you need its description name 'kitchen_description' and the TF prefix 'iai_kitchen'. In the launchfile 'world.launch' you can see, that the name of the kitchen URDF is remapped from 'robot_description' (the default) to 'kitchen_description', which is the name you need in your RViz configuration. When you open the robot model parameter, you have two checkboxes for 'Visual Enabled' and 'Collision Enabled'. The first shows a detailed mesh of each component in the kitchen, the latter shows the collision objects, which are by far less detailed. We use collision objects when navigating the PR2, since the calculation of potential collisions would be very expensive when using visual meshes.

When you are ready with the robot model, look at the 'TF' visualization in RViz. Like the robot model it tells you if any poses can't be resolved, e.g. when their parent link is not published in TF. Open up the 'Tree' parameter of 'TF' (left panel of RViz). This is a tree representing all the TF poses with their respective parents and children. If you change the pose of a frame, all of its children will change poses as well.

As you can see, this environment visualizes all the TF poses we can use in our tutorial. Get back to the debug window (Bullet visualization window) to see the actual meshes of the objects. The debug window only shows the collision meshes, not the detailed visuals, since we want to keep the bullet world fast and lightweight and concentrate on collisions not visuals.

The environment is now initialized. For convenience, there exists a function that will execute all of the above in one call. If you call it now it will reinitialize everything, which is also fine:

BTW-TUT> (roslisp-utilities:startup-ros)

This starts a ROS node and executes the ROS initialization functions, which are registered in CRAM through roslisp-utilities:register-ros-init-function. You can see which functions are registered by executing the following:

BTW-TUT> (alexandria:hash-table-keys roslisp-utilities::*ros-init-functions*)

You should find all of the functions that we manually called above and one additional, the (init-projection) function, which initializes the Bullet world with the robot and the kitchen environment.

Manipulating the kitchen

Make sure you have spawned your kitchen from urdf, not the semantic map. Check the section above for spawning the kitchen. Let us inspect the kitchen now:

BTW-TUT> (btr:object btr:*current-bullet-world* :kitchen)

If needed, change the name of ':kitchen' to the name you used when spawning the kitchen. This call should give you a robot-object into the REPL. Inspect it by right-clicking on the urdf-semantic-map-object and choosing Inspect. You can look into all its joints and states, how they are linked and in what state they are (press L to go up in the inspector). Now we are about to change one of those joints, causing the kitchen to open the door of the fridge.

BTW-TUT> (btr:set-robot-state-from-joints '(("iai_fridge_door_joint" 0.3d0)) (btr:object btr:*current-bullet-world* :kitchen))

If you did everything right, the fridge door should now be slightly open. Check the other joints and change them as you wish. Watch their limits though.

Spawn and change objects in the world

From this point on let us use the fast initialization function to start the bullet-world:

BTW-TUT> (roslisp-utilities:startup-ros)

Now let us spawn some household objects:

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (assert (btr:object ?world :mesh mug-1 ((0 0 2) (0 0 0 1)) :mass 0.2 :color (1 0 0) :mesh :mug))))

There appears a mug above the robot's head. We can also move objects:

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (assert (btr:object-pose ?world mug-1 ((-1 1 1) (0 0 0 1))))))

Now the mug is above the kitchen island table.

To get the instance of the spawned Bullet object there is a function btr:object:

BTW-TUT> (btr:object btr:*current-bullet-world* 'mug-1)

We can get the pose of that object through the function btr:pose:

BTW-TUT> (btr:pose (btr:object btr:*current-bullet-world* 'mug-1)) #<CL-TRANSFORMS:POSE #<3D-VECTOR (-1.0d0 1.0d0 1.0d0)> #<QUATERNION (0.0d0 0.0d0 0.0d0 1.0d0)>>

Geometric reasoning

Our mug is floating in the air. The belief state world in CRAM is static, in order to apply the physics to it we use the Bullet physics engine to simulate the world for a certain amount of time.

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (btr:simulate ?world 10)))

This simulates the world for 1 second and the mug falls onto the table.

We can also ask if the scene is stable or, if we simulate the world for longer time, would objects change positions:

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (btr:stable ?world))) (((?WORLD . ...)))

This copies our current world and simulates it for a certain amount of time and compares the poses of objects before and after

simulation. If the result we got is (NIL) or anything else except NIL then the mug is stably standing on the table.

Now, let's ask if the mug is currently visible for the robot:

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (cram-robot-interfaces:robot ?robot) (btr:visible ?world ?robot mug-1))) NIL

Let's move PR2 such that the cup is in its visibility range:

BTW-TUT> (prolog:prolog '(and (btr:bullet-world ?world) (assert (btr:object-pose ?world cram-pr2-description:pr2 ((0.5 1 0) (0 0 1 0))))))

and ask the visibility query again: instead of typing, put the cursor where you would type and press Ctrl-Up. This will find previously executed commands.

Abstract entity descriptions

Now let's generate a distribution of locations from which the robot would be able to see the mug. For that we create an abstract location description that we call a designator. The abstract description gets grounded into specific geometric coordinates with the reference function.

BTW-TUT> (let* ((mug-pose (btr:pose (btr:object btr:*current-bullet-world* 'mug-1))) (?mug-pose-stamped (cl-transforms-stamped:pose->pose-stamped "map" 0 mug-pose)) (?mug-location-designator (desig:a location (pose ?mug-pose-stamped))) (to-see-designator (desig:a location (type visible) (for pr2) (location ?mug-location-designator)))) (desig:reference to-see-designator)) #<CL-TRANSFORMS-STAMPED:POSE-STAMPED FRAME-ID: "map", STAMP: 0.0 #<3D-VECTOR (-0.2800002098083496d0 1.6999998092651367d0 0.0d0)> #<QUATERNION (0.0d0 0.0d0 -0.9338500185757329d0 0.3576648470371437d0)>>

We create two symbolic descriptions: one is for the pose that we want to perceive, which we parse to a pose-stamped, and the other one is a pose for the robot from where it would be able to see the mug.

We can also completely abstract away from geometric poses (we used the mug-pose variable which was a quantitative value) by describing the mug as an object standing on the table called the “kitchen_island” in the URDF. This will be equivalent to telling the robot: “go stand in a position such that you would be able to perceive a mug standing on the kitchen island table top”. Please note, that we do not explicitly specify which mug we are searching for, we just say that somewhere on the table there should be a mug.

BTW-TUT> (let* ((?on-counter (desig:a location (on "CounterTop") (name "iai_kitchen_kitchen_island_counter_top"))) (?the-object (desig:an object (type mug) (at ?on-counter))) (location-to-see (desig:a location (type visible) (for pr2) (object ?the-object)))) (desig:reference location-to-see)) #<CL-TRANSFORMS-STAMPED:POSE-STAMPED FRAME-ID: "map", STAMP: 0.0 #<3D-VECTOR (-0.07000017166137695d0 1.8199996948242188d0 0.0d0)> #<QUATERNION (0.0d0 0.0d0 -0.9072884056004601d0 0.42050891674609586d0)>>

Here, as we didn't specifically say which pose we are trying to perceive: the robot randomly samples one pose from all the poses on the kitchen island table and generates a distribution to perceive that pose, i.e., it looks in the general direction of the table. If you look at the debug window now you can see an area that looks like a part of a Gaussian bell near the table. We can use that pose to move the robot there.

BTW-TUT> (btr-utils:move-robot *)

Grasp objects

We need a clean environment for this, so let's clean the world:

BTW-TUT> (roslisp-utilities:startup-ros)

From now on we will use the utility functions from cram_bullet_reasoning_utilities package, to save time on writing lengthy Prolog queries.

We need an object to grasp. For that we will use a mesh of a bottle loaded from the resources subdirectory of the tutorial.

BTW-TUT> (add-objects-to-mesh-list)

Then we spawn an object of type bottle and move it onto the table.

BTW-TUT> (btr-utils:spawn-object 'bottle-1 :bottle) BTW-TUT> (btr-utils:move-object 'bottle-1 (cl-transforms:make-pose (cl-transforms:make-3d-vector -2 -0.9 0.83) (cl-transforms:make-identity-rotation)))

Lastly we simulate the world for 10 seconds to make sure, nothing moves unexpectedly on runtime.

BTW-TUT> (btr:simulate btr:*current-bullet-world* 100)

Before we grasp the bottle, let's first prepare the PR2, just three steps: move the arms out of sight, navigate the base in front of the bottle and look at the bottle. The point we want the base to navigate to can be hard coded and saved temporarily.

BTW-TUT> (setf ?grasp-base-pose (cl-transforms-stamped:make-pose-stamped "map" 0.0 (cl-transforms:make-3d-vector -1.8547d0 -0.381d0 0.0d0) (cl-transforms:axis-angle->quaternion (cl-transforms:make-3d-vector 0 0 1) (/ pi -2))))

The same thing can be done with the point we want to look at.

BTW-TUT> (setf ?grasp-look-pose (cl-transforms-stamped:make-pose-stamped "base_footprint" 0.0 (cl-transforms:make-3d-vector 0.65335d0 0.076d0 0.758d0) (cl-transforms:make-identity-rotation)))

To execute any plan in CRAM, we need a top-level context. Besides that we also use a macro to specify that the demo should be executed in simulation, not on the real robot. Putting that together we end up with this plan:

BTW-TUT> (proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (cpl:top-level (cpl:par (pr2-pp-plans::park-arms) (exe:perform (desig:a motion (type going) (target (desig:a location (pose ?grasp-base-pose))))) (exe:perform (desig:a motion (type looking) (target (desig:a location (pose ?grasp-look-pose))))))))

We can execute the arm movement, navigation and head tilt in parallel, since each of them uses different joints of the robot. That's what cpl:par is for. The function park-arms performs a motion on the joints of both arms, which brings them into a specific position, so they don't hang around the field of view. The base navigation is achieved by the motion designator trying to reach our ?grasp-base-pose. Looking at our target works the same way.

To grasp the bottle we need to have its pose in the room. Therefore, we first perceive it and store the result in *perceived-object*:

BTW-TUT> (defvar *perceived-object* nil "Object designator returned from perception") (proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (cpl:top-level (setf *perceived-object* (pr2-pp-plans::perceive (desig:an object (type bottle))))))

With that resulting perceived object we perform the picking up action. With the torso so far down we might not be able to reach for the bottle, so we need to also push the torso up:

(proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (cpl:top-level (let ((?perceived-bottle-desig *perceived-object*)) (exe:perform (desig:a motion (type moving-torso) (joint-angle 0.3))) (exe:perform (desig:an action (type picking-up) (object ?perceived-bottle-desig) (arm right))))))

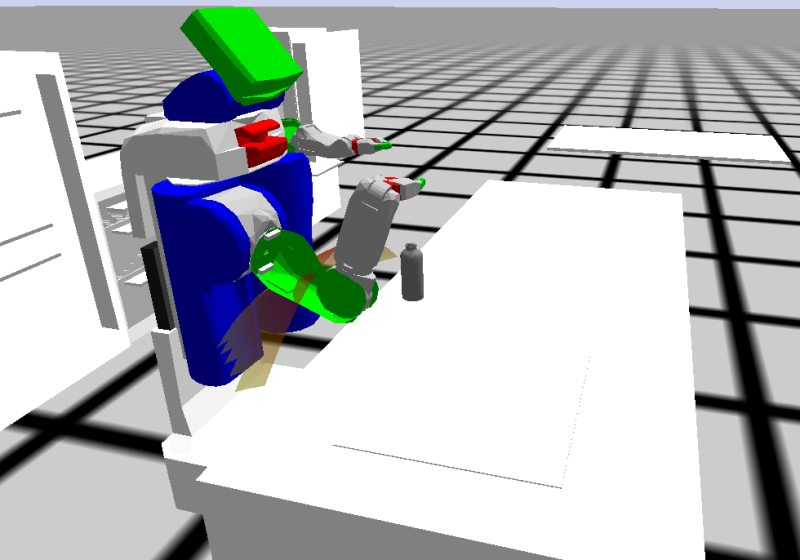

Now the PR2 should have the bottle in his right gripper.

To see which action designator is used for picking up the object we can use a grep search. Use “Alt-x”, type rgrep for full-text search, hit enter, then use “:type :picking-up” as search string. The code we are looking for is in cram_pr2/cram_pr2_pick_place_plans/src/pick-place-designators.lisp. Here the action designator is broken down to atomic action designators, that will be resolved depending on the hardware that we choose to run on, either the simulation or the real robot.

Place objects

To place things held in the gripper we first need an object attached to a gripper. Use the previous chapter Grasp objects to prepare the robot appropriately. The easiest way to place down an object is by calling the code below. It will put the bottle down to its original position.

BTW-TUT> (proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (let ((?perceived-bottle-desig *perceived-object*)) (cpl:top-level (exe:perform (desig:an action (type placing) (object ?perceived-bottle-desig) (arm right))))))

But if we want to place the bottle somewhere else we need to define those locations. Use hard-coded poses for now, since dynamic plans require massive failure handling, which will be discussed in a later chapter. Pick up the bottle again.

BTW-TUT> (proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (cpl:top-level (let ((?perceived-bottle-desig *perceived-object*)) (exe:perform (desig:a motion (type moving-torso) (joint-angle 0.3))) (exe:perform (desig:an action (type picking-up) (object ?perceived-bottle-desig) (arm right))))))

Now move the robot to the other table. We use a motion designator for that, which needs a specific pose.

BTW-TUT> (proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (cpl:top-level (exe:perform (let ((?pose (cl-tf:make-pose-stamped cram-tf:*robot-base-frame* 0.0 (cl-transforms:make-3d-vector -0.15 2.0 0) (cl-transforms:make-quaternion 0.0d0 0.0d0 -1.0d0 0.0d0)))) (desig:a motion (type going) (target (desig:a location (pose ?pose))))))))

This seems like a good spot to place the bottle. Let's put it down to coordinates defined in ?drop-pose:

BTW-TUT> (proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (cpl:top-level (let ((?perceived-object-desig *perceived-object*) (?drop-pose (cl-transforms-stamped:make-pose-stamped "map" 0.0 (cl-transforms:make-3d-vector -0.8d0 2.0d0 0.9d0) (cl-transforms:make-identity-rotation)))) (exe:perform (desig:an action (type placing) (arm right) (object ?perceived-object-desig) (target (desig:a location (pose ?drop-pose))))))))

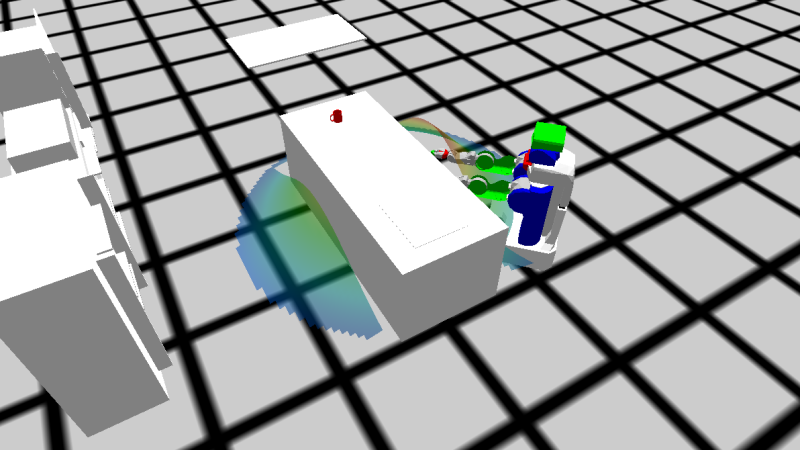

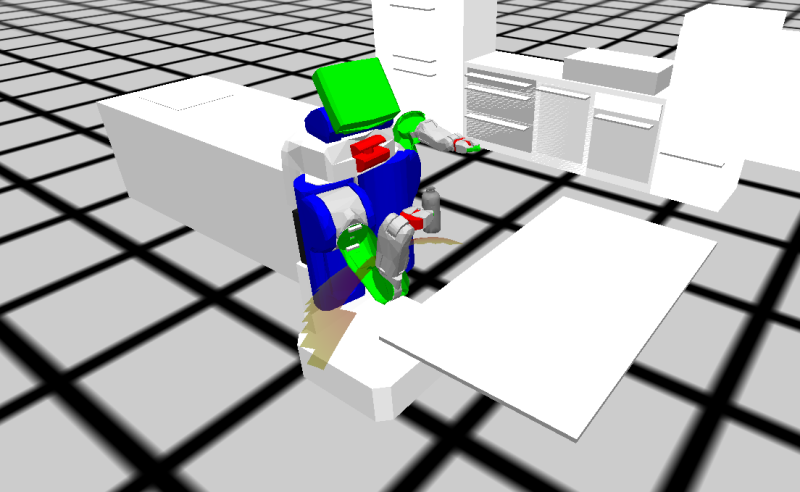

With these actions you can pick up objects, move the robot and place them at specific points. The scene should now look like this:

Construct plans

The goal of this chapter is to write a plan, that switches the position of two objects in the world. First we want to have a clean environment:

BTW-TUT> (init-projection)

If you just started your Emacs or loaded the package don't forget to add our extra meshes from resource, otherwise you won't be able to spawn a bottle.

BTW-TUT> (add-objects-to-mesh-list)

Now we need those two objects in the world.

BTW-TUT> (prolog:prolog `(and (btr:bullet-world ?world) (assert (btr:object ?world :mesh bottle-1 ((-2 -0.9 0.861667d0) (0 0 0 1)) :mass 0.2 :color (1 0 0) :mesh :bottle)) (assert (btr:object ?world :mesh bottle-2 ((-0.65 2 0.955) (0 0 0 1)) :mass 0.2 :color (0 1 0) :mesh :bottle)) (btr:simulate ?world 100)))

To make the plan shorter we can define some parameters a priori. The *pose-meal-table* is the pose, where the pr2 should stand, when he approaches the green bottle. The other pose, *pose-meal-counter* is the base pose for the counter table, when we want to place the bottle.

BTW-TUT> (defparameter *pose-bottle-1* (cl-transforms-stamped:make-pose-stamped "map" 0.0 (cl-transforms:make-3d-vector -2 -0.9d0 0.86d0) (cl-transforms:make-identity-rotation))) (defparameter *pose-bottle-2* (cl-transforms-stamped:make-pose-stamped "map" 0.0 (cl-transforms:make-3d-vector -0.8 2 0.9) (cl-transforms:make-identity-rotation))) (defparameter *pose-meal-table* (cl-tf:make-pose-stamped "map" 0.0 (cl-tf:make-3d-vector -0.15 2.0 0) (cl-tf:make-quaternion 0.0d0 0.0d0 -1.0d0 0.0d0))) (defparameter *pose-counter* (cl-transforms-stamped:make-pose-stamped "map" 0.0 (cl-transforms:make-3d-vector -1.8547d0 -0.381d0 0.0d0) (cl-transforms:axis-angle->quaternion (cl-transforms:make-3d-vector 0 0 1) (/ pi -2))))

Now we define the whole procedure. We go to the counter and pick up the first bottle. Then go to the meal table, pick up the other bottle and place the first bottle on the same spot. After that we go back to the counter and place the second bottle. You should define each of the small steps as separate functions, so your top-level plan stays readable.

(defun spawn-two-bottles () (unless (assoc :bottle btr::*mesh-files*) (add-objects-to-mesh-list)) (prolog:prolog `(and (btr:bullet-world ?world) (assert (btr:object ?world :mesh bottle-1 ((-2 -0.9 0.860) (0 0 0 1)) :mass 0.2 :color (1 0 0) :mesh :bottle)) (assert (btr:object ?world :mesh bottle-2 ((-0.8 2 0.9) (0 0 0 1)) :mass 0.2 :color (0 1 0) :mesh :bottle)) (btr:simulate ?world 100)))) (defun navigate-to (?navigation-goal) (exe:perform (desig:a motion (type going) (target (desig:a location (pose ?navigation-goal)))))) (defun look-at (?point-of-interest) (exe:perform (desig:a motion (type looking) (target (desig:a location (pose ?point-of-interest)))))) (defun get-perceived-bottle-desig () (let* ((?bottle-desig (desig:an object (type bottle))) (?perceived-bottle-desig (exe:perform (desig:a motion (type detecting) (object ?bottle-desig))))) ?perceived-bottle-desig)) (defun pick-up (?object-designator &optional (?arm :right)) (exe:perform (desig:an action (type picking-up) (arm ?arm) (object ?object-designator)))) (defun place-down (?pose ?object ?arm) (exe:perform (desig:an action (type placing) (arm ?arm) (object ?object) (target (desig:a location (pose ?pose))))))

Here we go. Now we can use those functions to write down the top-level plan.

BTW-TUT> (defun test-switch-two-bottles () (spawn-two-bottles) (proj:with-projection-environment pr2-proj::pr2-bullet-projection-environment (cpl:top-level ;; Go to counter top and perceive bottle (let ((?navigation-goal *pose-counter*) (?ptu-goal (cl-transforms-stamped:make-pose-stamped "base_footprint" 0.0 (cl-transforms:make-3d-vector 0.65335d0 0.076d0 0.758d0) (cl-transforms:make-identity-rotation)))) (cpl:par ;; Move torso up (exe:perform (desig:a motion (type moving-torso) (joint-angle 0.3))) (pr2-pp-plans::park-arms) (navigate-to ?navigation-goal)) (look-at ?ptu-goal)) ;; Pick up bottle-1 with right arm. (let ((?perceived-bottle-1 (get-perceived-bottle-desig))) (pick-up ?perceived-bottle-1 :right) (pr2-pp-plans::park-arms :arm :right) ;; Move to the meal table (let ((?pose *pose-meal-table*)) (navigate-to ?pose)) ;; Pick up bottle-2 with left arm (let ((?perceived-bottle-2 (get-perceived-bottle-desig))) (pick-up ?perceived-bottle-2 :left) ;; Move left arm out of sight (pr2-pp-plans::park-arms :arm :left) ;; Place bottle-1 on second table (let ((?drop-pose *pose-bottle-2*)) (place-down ?drop-pose ?perceived-bottle-1 :right)) ;; Move right arm out of sight (pr2-pp-plans::park-arms :arm :right) ;; Move to the counter table (let ((?navigation-goal *pose-counter*)) (navigate-to ?navigation-goal)) ;; Place bottle-2 on the counter (let ((?drop-pose *pose-bottle-1*)) (place-down ?drop-pose ?perceived-bottle-2 :left)) (pr2-pp-plans::park-arms))))))

Each time you run test-switch-two-bottles you first need to reset the world with (init-projection). You can include the call at the top of the test function, but maybe you want to comment some parts out to see how the plan behaves.

The package cram_bullet_reasoning_utilities has a number of utility functions to make the rapid prototyping with the Bullet world faster and easier, there are functions such as spawn-object, move-object, kill-all-objects, move-robot etc. that execute the Prolog queries with default values of parameters that are not important, e.g. default colors for objects.

By pressing . while holding Alt (Alt-.) while the Emacs cursor is on the function name you will be redirected to the definition of the function to see what exactly it does. Alt-, brings back the previous window. Try this for init-projection, for example.